The Pressure to Perform

Content leaders at mid-market and enterprise technology companies face mounting pressure to deliver engagement and prove ROI on every content dollar. Simply producing more content isn’t enough. Content must rise above the noise, connect with skeptical technical buyers, and contribute directly to the sales pipeline.

The AI Promise

Tools like Microsoft Copilot, ChatGPT, and Claude offer a tempting solution. They generate outlines in seconds, turn briefs into drafts in minutes, and scale output without increasing headcount.

The Quality Wall

But after a few months of AI use, most content leaders encounter a limit—not of technology, but of quality. Review processes become bottlenecks. Senior editors spend hours reshaping AI drafts that hit the topic but miss the strategy. Technical reviewers flag outdated or incorrect claims confidently presented as current. Brand stewards notice a sameness across posts: accurate but generic, readable but uninspired.

The issue isn’t just technical correctness. It’s the absence of human voice, character, and judgment—the elements that transform information into insight. Audiences sense when content lacks a human touch. They may not know why, but they disengage. The result is content that fails to convert and doesn’t justify its cost.

“AI accelerates production but great content requires discernment.”

– Jonathan Chappelle, Director of Technology Strategy and Business Development, Prowess Consulting

The Agentic Trap

Prowess calls this the “agentic AI teammate trap”: the mistaken belief that AI can own outcomes the way a human teammate does. It can’t. AI lacks strategic context, business judgment, and accountability. When AI produces underperforming content, the AI can’t be held to account for it; a human still has to answer for the result.

The Prowess Solution

Abandoning AI isn’t the answer. The solution is a framework that keeps humans in control of strategy, brand, and credibility while letting AI handle research, drafting, and formatting. This article introduces that framework and takes it for a test drive.

The Core Principle: Not All Content Tasks Require Equal Human Oversight

Prowess views AI autonomy as a spectrum. At one end, high AI autonomy means little human control is needed. At the other end, low AI autonomy means close human oversight and control are required. High autonomy suits first drafts, research synthesis, and formatting. Medium autonomy works for outlines and section expansion. Low autonomy, meaning high human control, is essential for strategic messaging, executive communications, and sensitive technical claims.

Content teams should map each project to the right autonomy level using four criteria:

- Strategic importance: How closely does this content tie to revenue and brand?

- Technical complexity: How specialized is the knowledge required to understand the content?

- Audience sensitivity: How critical are tone and accuracy?

- Regulatory risk: What happens if the content is wrong?

A product feature blog post might warrant medium-high autonomy. A competitive battle card demands low autonomy and tight human oversight. Technical documentation falls in the middle but needs thorough human review.

This approach defines the Prowess philosophy: humans set the goals, and AI accelerates execution. AI provides raw material; humans shape it for impact. Clear governance prevents drift toward overreliance on AI and ensures speed where it’s safe.

Prowess built a framework to operationalize the autonomy principle. It combines two elements: agency dials that set AI’s role and autonomy level, and milestone review gates that uphold quality.

The Six Agency Dials: Setting AI Autonomy Levels

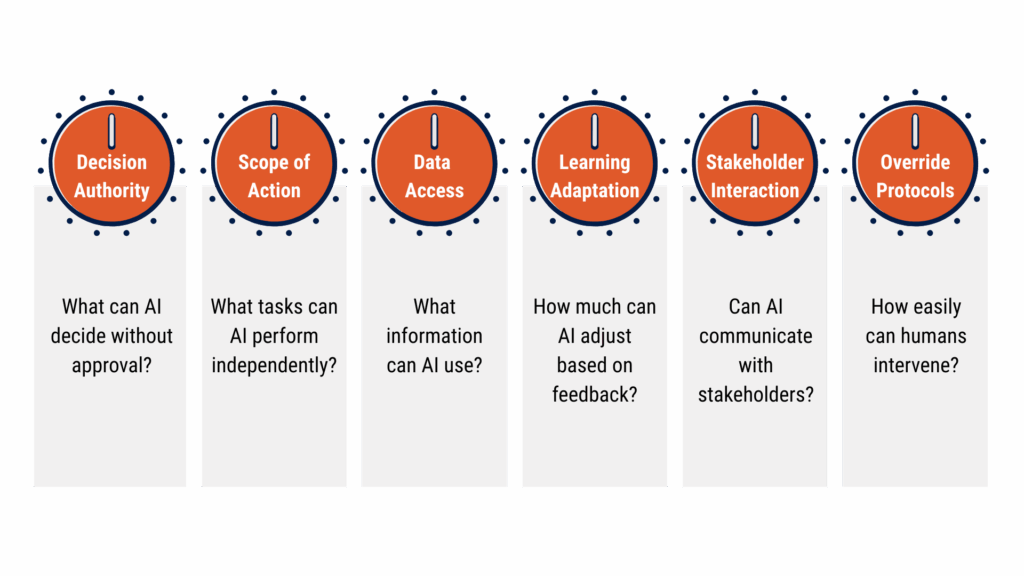

Different content types need different levels of oversight. Prowess uses six dials to calibrate how much control AI has over a given piece of content. The dial settings determine which review gates apply: low-risk, high-autonomy content may skip some gates, while high-stakes content requires more checkpoints.

The Four Milestone Review Gates

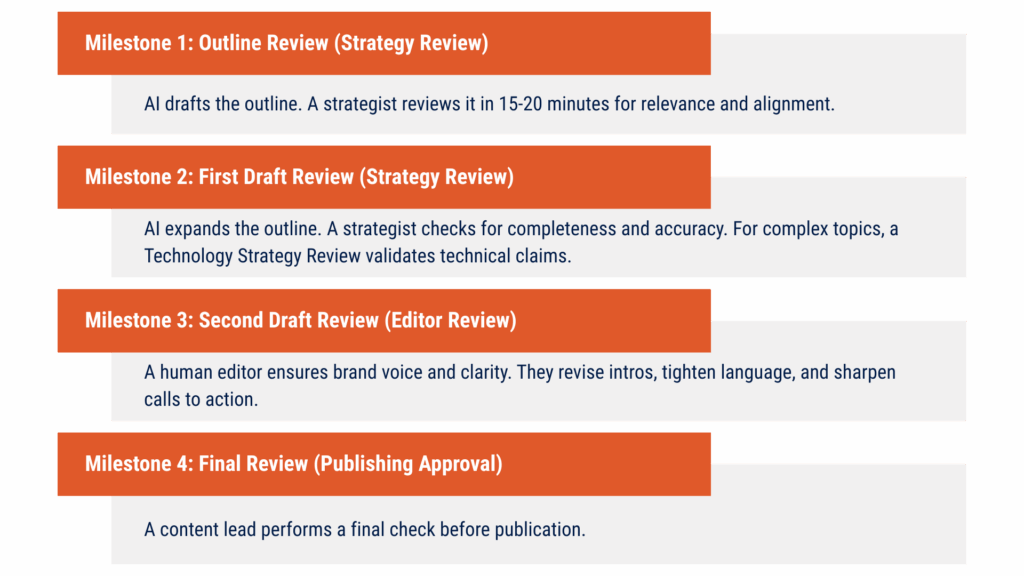

Traditional review models, which evaluate a finished draft, don’t fit AI-accelerated workflows. When AI can draft 2,000 words in seconds, waiting until the end to review lets a flawed premise or factual error spread through the entire piece. Milestone gates catch issues early, when fixes are fast and cheap.

Not all content needs all four gates. Agency dials guide which ones apply.

Prowess Consulting's Execution Model

Prowess deploys this framework through four roles:

- Content strategist: Aligns content with client goals and crafts AI prompts that preserve brand voice.

- Program manager: Oversees planning and ensures timely reviews.

- Editor: Maintains voice and readability at key milestones.

- Technical SMEs: Validate accuracy when the agency dials require it.

This structure lets each expert focus on their specialty. AI handles drafting; humans ensure quality and strategy.

The Secret Sauce: Iteration

Even with strong human oversight, AI should not be asked to do more than it can do well. Short-form posts may be completed in one cycle. Long-form content works best when AI drafts it section by section. This chunked approach keeps each review focused and allows mid-course corrections before problems carry into later sections. Iteration helps strategists keep the content aligned with goals and voice.

AI accelerates production, but great content requires discernment. Winning teams won’t replace humans with AI. They’ll assign humans and AI to the work each does best and build systems that respect both.

The Prowess framework boosts velocity without sacrificing quality. Reviewers focus on judgment, not grunt work. Strategic control stays with humans. Teams feel empowered, not displaced.

That’s the essence of human-led, agent-accelerated content.

Interested in Learning More?

- Want to learn more about agentic AI? Read: “How to Navigate the Agentic AI Teammate Trap” and “A Framework for Dialing In Agentic AI.”

- Need help assessing your content operations? Contact Prowess to schedule a content operations assessment.

Jonathan Chappelle
