Artificial intelligence (AI) is turning up everywhere these days. We hear about Google using AI for image recognition, Apple using AI for self-driving cars, and even healthcare companies using AI for diagnosing patients or treating diseases.

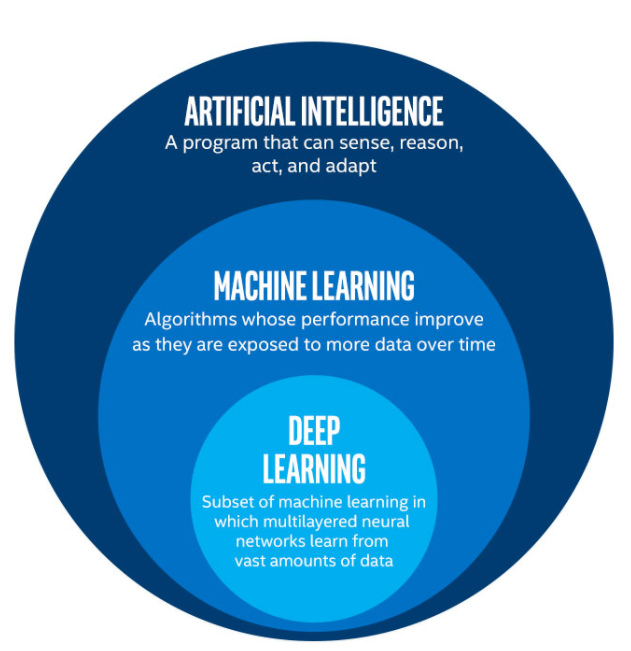

But what does AI really mean? And how is it different from machine learning (ML) and deep learning (DL)? Are there really differences between those terms?

Here at Prowess, we’ve been taking on more and more projects that touch on these technologies. In this post, I’ll pass on some of what we’ve learned about what the terms mean, how the technologies are changing our lives, and what hardware and software developments are enabling AI technologies to take off.

Artificial Intelligence

AI is an umbrella term that dates from the 1950s. Essentially, it refers to computer software that can reason and adapt based on sets of rules and data. The original goal of AI was to mimic human intelligence. Computers have excelled at performing complex calculations quickly from the outset, but until recently they couldn’t reliably tell a dog from a fox or your brother from a stranger. Even now, computers frequently struggle with tasks that humans perform almost subconsciously. So why are some tasks so difficult for computers?

As humans, our brains are collecting and processing information constantly. We take in data from all our senses and store it away as experiences that we can draw from to make inferences about new situations. In other words, we can respond to new information and situations by making reasoned assumptions based on past experiences and knowledge.

AI, in its basic form, is not nearly as sophisticated. For computers to make useful decisions, they need us to provide them with two things:

- Lots and lots of relevant data

- Specific rules on how to examine that data

The rules involved in AI are usually binary questions that the computer program asks in sequence until it can provide a relevant answer. (For example, a program might identify a dog breed by comparing it to other breeds, one pair at a time, or play checkers by evaluating the outcome of each available move, one at a time.) If the program repeatedly fails to determine an answer, the programmers have to create more rules that are specific to the problem at hand. In this form, AI is not very adaptable, but it can still be useful because modern processors can work through massive sets of rules and data in a short time. The IBM Watson computer is a good example of a basic AI system.
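To make the idea of sequential binary questions concrete, here is a minimal sketch in Python. The rules, feature names (`barks`, `bushy_tail`, `pointed_snout`), and sample animals are all made up for illustration; a real rule-based system would have far more rules and would not look exactly like this.

```python
# Hypothetical hand-written rules: each one pairs a yes/no question with the
# label to return when the answer is "yes". The program asks them in order.
RULES = [
    (lambda animal: animal["barks"], "dog"),
    (lambda animal: animal["bushy_tail"] and animal["pointed_snout"], "fox"),
]


def classify(animal):
    """Ask each binary question in sequence and return the first matching label."""
    for question, label in RULES:
        if question(animal):
            return label
    return None  # no rule matched, so a programmer would have to add a new rule


# Made-up examples, described only by the features the rules know about.
dog = {"barks": True, "bushy_tail": False, "pointed_snout": False}
fox = {"barks": False, "bushy_tail": True, "pointed_snout": True}
cat = {"barks": False, "bushy_tail": False, "pointed_snout": False}

for name, animal in [("dog", dog), ("fox", fox), ("cat", cat)]:
    print(name, "->", classify(animal))
```

The cat falls through every rule and comes back as `None`, which is the situation described above: the program cannot adapt on its own, so someone has to write another rule.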

Machine Learning

The next stage in the development of AI is to use machine learning (ML). ML often relies on neural networks (computer systems loosely modeled on the human brain and nervous system), which can classify information into categories based on elements that those categories contain (for example, photos of dogs or heavy metal songs). ML uses probability to make decisions or predictions about data with a reasonable degree of certainty. In addition, it can refine itself when given feedback on whether it is right or wrong: it can modify how it analyzes data (or which data it treats as relevant) to improve its chances of making a correct decision in the future. ML works relatively well for shape detection, such as identifying types of objects or recognizing letters for transcription.
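As a rough illustration of learning from feedback, the sketch below trains a single artificial neuron in plain Python. It outputs a probability and nudges its weights after every guess according to how wrong the guess was. The data set, the feature meaning, and the settings (`epochs`, `learning_rate`) are assumptions chosen for the example, and real ML systems use far larger models and libraries.

```python
import math


def predict(weights, bias, features):
    """Return the estimated probability that the example belongs to class 1."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=1000, learning_rate=0.5):
    """Fit one neuron by adjusting its weights after each prediction."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Feedback: how far the predicted probability is from the truth.
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Made-up data: (height, weight) scaled to 0..1; label 1 means "large breed".
EXAMPLES = [
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.3], 0),
    ([0.7, 0.8], 1), ([0.8, 0.7], 1), ([0.9, 0.9], 1),
]

weights, bias = train(EXAMPLES)
print("small dog:", round(predict(weights, bias, [0.15, 0.15]), 3))
print("large dog:", round(predict(weights, bias, [0.85, 0.85]), 3))
```

Nobody wrote a rule that says "large dogs are tall and heavy" here. The neuron arrives at that distinction by repeatedly being told whether its guesses were right.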

The ideas behind neural networks go back decades, and the training methods used for ML today were popularized in the 1980s. Because these networks are computationally intensive, however, they have only been viable on a large scale since the advent of graphics processing units (GPUs) and high-performing CPUs.

Deep Learning

Deep learning (DL) is essentially a subset of ML that extends ML capabilities across multilayered neural networks to go beyond just categorizing data. DL can actually learn (in effect, train itself) from massive amounts of data. With DL, it’s possible to combine the unique ability of computers to process massive amounts of information quickly with the human-like ability to take in, categorize, learn, and adapt. Together, these skills allow modern DL programs to perform advanced tasks, such as identifying cancerous tissue in MRI scans. DL also makes it possible to develop driverless cars and designer medications that are tailored to an individual’s genome.
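The sketch below shows only what "multilayered" means structurally: the output of one layer of artificial neurons becomes the input of the next. The weights are hand-picked for illustration rather than learned (a real DL system would learn them from data), and the tiny network computes "exactly one of two inputs is on," a pattern that a single layer of this kind cannot represent on its own.

```python
def relu(values):
    """Keep positive values and replace negative ones with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum plus a bias per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) weights, chosen so the network outputs 1.0 when
# exactly one input is 1 and 0.0 otherwise.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, "->", forward(pair))
```

Production networks stack many more layers with thousands or millions of weights, which is why the computing hardware matters so much.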

ML and DL Are Transforming Computing for Businesses and Consumers

Both ML and DL capabilities have been seeping into our daily lives over the last several years, and the rate of change has accelerated recently. AI, ML, and DL topics seem to appear everywhere lately, from technology news stories to company marketing materials and websites. The technologies are indisputably altering the application and service landscapes for both enterprises and consumers.

For example, enterprise businesses can use ML and DL to mine much deeper into the data offered by social media, the Internet of Things (IoT), and traditional sources of customer and product data. By combining servers built on modern GPUs and CPUs with accelerated storage and networking hardware, companies can rapidly analyze massive quantities of data in near real time. ML and DL software can identify trends, issues, or opportunities for new products and services by learning over time which data is relevant and important for generating useful insights.

Consumers are benefiting from years of research and investment by Google, Amazon, Apple, Microsoft, and other big players. The personal assistants from these companies are battling for dominance in voice-activated control of online searches, apps, devices, and services. As a result, we can sit comfortably on our couches and use voice controls to check our emails and the weather, to start playing music, to purchase groceries, and to turn off the lights. We can also get much more accurate recommendations for music, books, news, and products based on our tastes and interests. AI is also integrated with virtual and augmented reality. Google has released software called Google Lens that lets you point your phone camera at an object to automatically identify it or take an appropriate action based on the target. For example, you could point the camera at a flower to find out its name, or point it at a concert poster for an option to purchase tickets.

AI Use Is Vast and Expanding
