Whether you’re a large enterprise or a small business, you’ve probably been told that data is one of your organization’s most important resources. Fair enough. But if you’re not already actively using analytics to tap this resource, where do you start? While this blog post won’t teach you how to start an analytics process within your organization, it will provide some practical pointers on handling analytics whatever the size of your organization (or your institutional experience with analytics).[1]

1. Consider More than Just Simple Descriptive Statistics

Large datasets can be tough to handle, and whatever the data, you need a toehold into it to begin to explore it. But remember to move beyond simple, go-to descriptive statistics like mean or standard deviation. They can mask important insights into your data and send you down blind alleys before you have even begun.

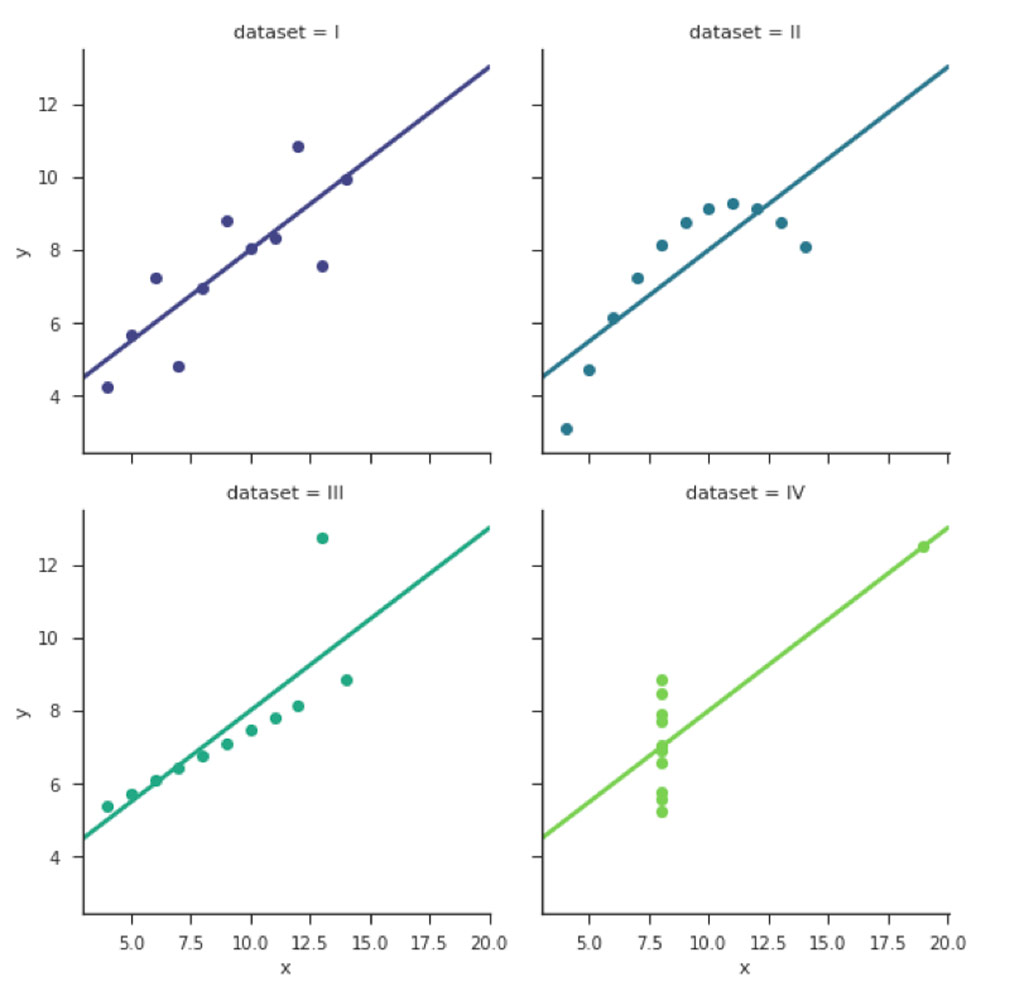

To prove this point, statistician Francis Anscombe generated four small bivariate datasets with identical (or nearly identical) descriptive statistics, now known as Anscombe’s quartet. Even though the datasets have the same mean and sample variance for their respective X and Y values (and nearly the same Pearson correlation and linear regression line), the huge differences between the datasets become immediately clear once they are graphed.

Figure 1. Visualization of the four datasets in Anscombe’s quartet
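The claim about matching summary statistics can be checked directly. The following is a minimal sketch using only the Python standard library; the data values are those commonly reproduced from Anscombe's 1973 paper, and the helper names (`pearson`, `summarize`, `SUMMARIES`) are our own choices for illustration.

```python
from math import sqrt
from statistics import mean, variance

# Anscombe's quartet. Datasets I-III share the same X values.
X123 = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]
QUARTET = {
    "I": (X123, [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]),
    "II": (X123, [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]),
    "III": (X123, [7.46, 6.77, 12.74, 7.11, 7.81, 8.84, 6.08, 5.39, 8.15, 6.42, 5.73]),
    "IV": ([8, 8, 8, 8, 8, 8, 8, 19, 8, 8, 8],
           [6.58, 5.76, 7.71, 8.84, 8.47, 7.04, 5.25, 12.50, 5.56, 7.91, 6.89]),
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def summarize(xs, ys):
    """Collect the descriptive statistics discussed in the text."""
    return {
        "mean_x": mean(xs),
        "var_x": variance(xs),  # sample variance (n - 1 denominator)
        "mean_y": mean(ys),
        "var_y": variance(ys),
        "r": pearson(xs, ys),
    }


SUMMARIES = {name: summarize(xs, ys) for name, (xs, ys) in QUARTET.items()}

if __name__ == "__main__":
    for name, s in SUMMARIES.items():
        print(name, {key: round(value, 3) for key, value in s.items()})
```

All four rows of output agree to about two decimal places, which is exactly why the summary numbers alone cannot tell these datasets apart.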

The point is not that mean, median, and standard deviation are bad, but that they can only give you so much insight into unfamiliar datasets. Consider incorporating other fast methods of initial data inspection such as histograms, cumulative distribution functions (CDFs), and quantile-quantile (Q-Q) plots.
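As a rough illustration of these inspection methods, here is a dependency-free sketch that computes the numbers behind a histogram, an empirical CDF, and a normal Q-Q plot. The sample data and the function names are hypothetical, and a plotting library would normally draw the results; this only shows what each method calculates.

```python
from statistics import NormalDist, mean, stdev


def histogram_counts(sample, bins=5):
    """Count observations in equal-width bins spanning min..max."""
    lo, hi = min(sample), max(sample)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data
    counts = [0] * bins
    for value in sample:
        index = min(int((value - lo) / width), bins - 1)
        counts[index] += 1
    return counts


def ecdf(sample):
    """Pair each sorted observation with its rank fraction (i + 1) / n."""
    xs = sorted(sample)
    n = len(xs)
    return [(x, (i + 1) / n) for i, x in enumerate(xs)]


def qq_points(sample):
    """Pair normal quantiles (fitted mean and stdev) with sorted observations."""
    xs = sorted(sample)
    n = len(xs)
    reference = NormalDist(mean(xs), stdev(xs))
    return [(reference.inv_cdf((i + 0.5) / n), x) for i, x in enumerate(xs)]


# Hypothetical sample with one extreme value.
SAMPLE = [2, 3, 3, 4, 4, 4, 5, 5, 6, 30]

if __name__ == "__main__":
    print("histogram:", histogram_counts(SAMPLE))
    print("ECDF tail:", ecdf(SAMPLE)[-2:])
    print("Q-Q tail: ", qq_points(SAMPLE)[-1])
```

In this made-up sample, the histogram piles nearly everything into the first bin, and the last Q-Q point sits far above its theoretical quantile. Both are quick signals of an extreme value that the mean alone would hide.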

2. Visualize Your Data

The example of Anscombe’s quartet in the last point illustrates that sometimes the fastest way to get initial insights about the shape of your data is through visualization. At the same time, don’t limit your visualizations to back-of-the-envelope sketches like histograms or CDFs; more sophisticated techniques like scatter-plot matrices, principal component analysis (PCA), or t-distributed stochastic neighbor embedding (t-SNE) can help you make sense of multi-dimensional data and point you in the most fruitful directions for further investigation. Beyond just detecting structure in high-dimensional data, visualization techniques like an Andrews plot can also help you detect outliers in your data.
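To give a feel for what PCA does before anything is plotted, the sketch below finds the first principal component of a tiny, made-up three-dimensional dataset. It uses power iteration on the covariance matrix purely to stay dependency-free; in practice you would more likely call a library routine. All names and data values are assumptions for illustration.

```python
from math import sqrt


def center(rows):
    """Subtract the column mean from every value."""
    n = len(rows)
    means = [sum(column) / n for column in zip(*rows)]
    return [[value - m for value, m in zip(row, means)] for row in rows]


def covariance_matrix(centered):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(centered), len(centered[0])
    return [
        [sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def first_component(cov, iterations=200):
    """Approximate the dominant eigenvector of cov by power iteration."""
    d = len(cov)
    vector = [1.0 / sqrt(d)] * d
    for _ in range(iterations):
        product = [sum(cov[i][j] * vector[j] for j in range(d)) for i in range(d)]
        norm = sqrt(sum(value * value for value in product))
        vector = [value / norm for value in product]
    return vector


def project(centered, direction):
    """Coordinate of each row along the given unit direction."""
    return [sum(a * b for a, b in zip(row, direction)) for row in centered]


# Hypothetical data: points lying almost on a line in 3-D space.
ROWS = [[1, 2, 0.1], [2, 4, -0.1], [3, 6, 0.0], [4, 8, 0.1], [5, 10, -0.1]]

CENTERED = center(ROWS)
COMPONENT = first_component(covariance_matrix(CENTERED))
SCORES = project(CENTERED, COMPONENT)

if __name__ == "__main__":
    print("first component:", [round(value, 3) for value in COMPONENT])
    print("1-D scores:     ", [round(value, 3) for value in SCORES])
```

The single column of scores captures almost all of the spread in the three original columns, which is what makes a one- or two-dimensional plot of PCA scores a useful summary of higher-dimensional data.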

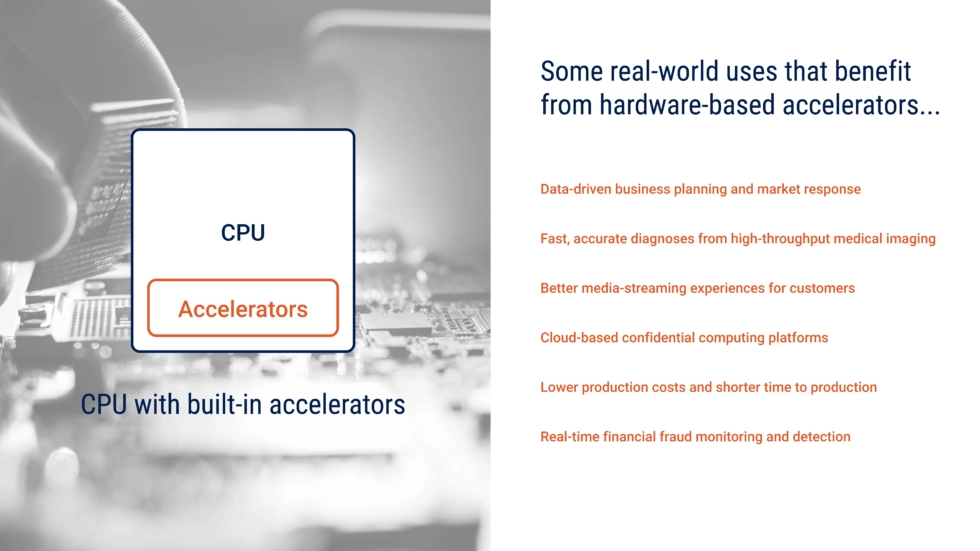

In short, built-in accelerators process data or complete tasks faster than general-purpose devices, benefitting you by reducing time to solution, power consumption, and hardware and operating costs.

The slightly longer explanation is that modern workloads are sensitive to application latency and performance throttling, so they can benefit significantly from hardware-based acceleration. Here are a few examples of real-world benefits you can get from highly optimized workloads.

- Faster data analysis enables businesses to better adapt to rapidly changing market conditions.

- Accelerated simulations and business information modeling (BIM) help engineers and manufacturers reduce production costs and time to production.

- A faster machine learning (ML) pipeline can help financial institutions deploy real-time fraud monitoring and detection.

- Physicians can use high-throughput medical imaging to diagnose diseases quickly and accurately, meaning their patients can start treatment sooner.

- Smoother, faster media streaming increases customer satisfaction and retention for content delivery network (CDN) providers.

- Security acceleration can help healthcare organizations use a cloud-based confidential computing platform for patient records.

Figure 1. Use CPUs with built-in accelerators and be rewarded with real-world benefits

Choosing the Right Built-In Accelerator

We recommend keeping things simple—choose an accelerator based on your business goals. For example, are you looking to make the most of your existing infrastructure? If you are running workloads on Intel® Xeon® Scalable processors, we have good news. You can deploy some or all of the following built-in accelerators without having to purchase additional hardware:

- Intel® AI Engines are purpose-built to boost inference and training performance.

- Intel® HPC Engines enhance performance for the most demanding, compute-intensive workloads.

- Intel® Security Engines protect platforms, systems, and data without impacting performance.

- Intel® Network Engines accelerate load-balancing, compression, and vRAN for high-density computing platforms.

- Intel® Analytics Engines help database and data-intensive workloads run faster and more efficiently.

- Intel® Storage Engines speed data movement and reduce latency for data storage platforms.

AMD EPYC is another data-center CPU family that uses SoC acceleration technologies, and the AMD Instinct family of data-center accelerators includes a CPU+GPU hybrid processor chip.

Getting Started on Your Hardware-Based Accelerator Journey

We suggest you start by examining your current workload environment and workflow processes to determine where bottlenecks are occurring. Using a built-in accelerator may allow you to improve performance without changing your current infrastructure. With Intel Xeon Scalable processors, improved performance could be as simple as turning on a built-in accelerator with the right level of drivers or software-optimized code. You might also consider modernizing your data center for improved compaction, security, and power conservation by upgrading your hardware infrastructure to CPUs that have built-in accelerators. Whatever type of accelerator you choose, we recommend standards-based hardware to help ensure your new accelerator integrates smoothly into existing infrastructures and is easy to configure and manage.
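One simple way to begin looking for bottlenecks is to time each stage of a workload separately. The sketch below is only an illustration: the three stages (`load`, `transform`, `compress`) are hypothetical stand-ins for your own pipeline steps, and real workloads usually call for proper profiling tools rather than wall-clock timers.

```python
import time
import zlib


def time_stages(stages, payload):
    """Run (name, function) stages in order and record wall-clock seconds."""
    timings = {}
    for name, function in stages:
        start = time.perf_counter()
        payload = function(payload)
        timings[name] = time.perf_counter() - start
    return payload, timings


def slowest_stage(timings):
    """Return the name of the stage that took the longest."""
    return max(timings, key=timings.get)


# Hypothetical stand-in stages for a data pipeline.
def load(count):
    return list(range(count))


def transform(values):
    return ",".join(str(value * value) for value in values).encode("ascii")


def compress(data):
    return zlib.compress(data, 9)


STAGES = [("load", load), ("transform", transform), ("compress", compress)]

if __name__ == "__main__":
    _, timings = time_stages(STAGES, 200_000)
    for name, seconds in timings.items():
        print(f"{name:<10} {seconds:.4f} s")
    print("slowest stage:", slowest_stage(timings))
```

Whichever stage dominates the timings is the first candidate to check against the accelerator categories listed earlier.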

To Learn More


To learn more about what we do at Prowess Consulting, view our latest research and follow us on LinkedIn.

1 Calculated as compound annual growth rate (CAGR) of 51 percent. “Hardware Acceleration Market Research Report – Global Forecast till 2030.” Market Research Future. September 2022. www.marketresearchfuture.com/reports/hardware-acceleration-market-8249.